
Intel's Habana unit reveals new Nvidia A100 challengers

Gaudi2 and Greco AI chips, plus more details on Arctic Sound-M server GPUs

Intel Vision: Intel is ramping up its efforts to take on GPU giant Nvidia in accelerated computing with a strategy built on a diverse portfolio of silicon designed for different purposes.

More than two years after acquiring AI chip startup Habana Labs for $2 billion, Intel's deep learning unit is revealing two new chips: Gaudi2 for training and Greco for inference. The x86 giant claims the former can leapfrog Nvidia's two-year-old A100 GPU in performance, at least according to its own benchmarks.

The reveal of Habana's second-generation deep learning chips was among several announcements Intel made on Tuesday during the Intel Vision event in Grapevine, Texas. Intel also shared new details for the multi-purpose, media-focused Arctic Sound-M server GPU, which is set to debut in systems in the third quarter.

Gaudi2 was launched today, and Greco, the successor to Habana's Goya chip, is expected to sample with customers in the second half of this year.

Intel claims both chips fill a gap in the training and inference market with "high-performance, high-efficiency deep learning compute choices" intended to lower the barrier to entry for companies exploring AI/ML.

Is Gaudi2 coming too late?

Gaudi2 is built on a 7nm process, a major node shrink from the first Gaudi's 16nm, and packs 24 Tensor Processor Cores and 96GB of HBM2e high-bandwidth memory, triple the core count and HBM capacity of its predecessor. Memory bandwidth has also nearly tripled, to 2.45TB/s, while SRAM has doubled to 48MB. Networking is significantly expanded too, with 24 100GbE ports compared to the first Gaudi's 10.
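The generational jumps above can be checked with a few lines of arithmetic. What follows is a minimal Python sketch, not anything published by Intel. The first-generation Gaudi's core count, HBM capacity and SRAM size are back-calculated from the "triple" and "doubled" claims, so treat them as assumptions. Memory bandwidth is left out because the predecessor's figure isn't given here. The dictionary keys and function names are hypothetical labels.

```python
# Illustrative spec comparison; keys and function names are hypothetical.
# First-gen Gaudi cores, HBM and SRAM are back-calculated from the article's
# "triple"/"doubled" claims (24 / 3, 96 / 3, 48 / 2), not from a datasheet.
GAUDI = {"tpc_cores": 8, "hbm_gb": 32, "sram_mb": 24, "ports_100gbe": 10}
GAUDI2 = {"tpc_cores": 24, "hbm_gb": 96, "sram_mb": 48, "ports_100gbe": 24}


def generation_ratios(old: dict, new: dict) -> dict:
    """Return new/old for every spec present in both generations."""
    return {key: new[key] / old[key] for key in old if key in new}


def aggregate_ethernet_gbps(ports: int, port_speed_gbps: int = 100) -> int:
    """Total raw Ethernet capacity across the chip's 100GbE ports."""
    return ports * port_speed_gbps


if __name__ == "__main__":
    for spec, ratio in generation_ratios(GAUDI, GAUDI2).items():
        print(f"{spec:>13}: {ratio:.2f}x")
    print("Gaudi  Ethernet:", aggregate_ethernet_gbps(GAUDI["ports_100gbe"]), "Gb/s")
    print("Gaudi2 Ethernet:", aggregate_ethernet_gbps(GAUDI2["ports_100gbe"]), "Gb/s")
```

Run as-is, the script prints ratios of 3.00x for cores and HBM, 2.00x for SRAM and 2.40x for network ports. That works out to 2,400Gb/s of raw Ethernet capacity versus 1,000Gb/s.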

But this all comes at a higher energy cost, with Gaudi2's thermal design power (TDP) maxing out at 600 watts compared to Gaudi's 350 watts.

In exchange for all that heat, Intel is claiming major performance leaps over Nvidia's A100, which is also made on a 7nm process but supports a maximum of 80GB of HBM2e memory.

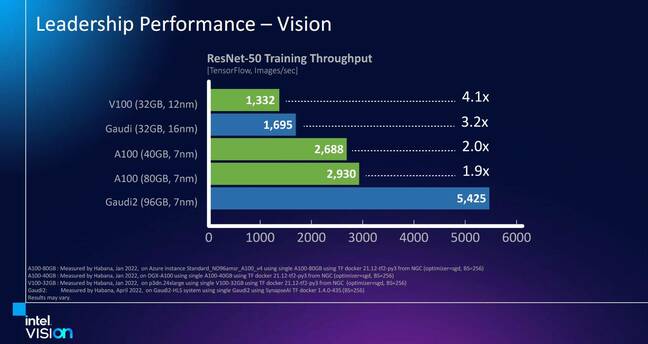

Compared to the 80GB A100, Intel says its internal benchmark finds Gaudi 2 is 1.9X faster for training the ResNet-50 image classification model and 2X faster for the first two phases of the BERT natural language processing model.

A graph comparing Intel's Habana Gaudi2 to other chips, including Nvidia's A100, for ResNet-50 training performance. (Click to expand.)

Intel is having its time in the sun during the Vision event, but remember that Nvidia plans to launch the A100's successor, the H100, in the third quarter, and that GPU will feature 80GB of HBM3 memory and up to 3TB/s of memory bandwidth. Given Nvidia's claim that the H100 delivers three times the performance per watt of the A100, there's a big question of whether Gaudi2 can keep up.

Speaking of Nvidia, it's difficult to catch up with the extensive investments the GPU giant has made in AI systems software over the last several years. For its part, Intel said users can train deep learning models on Gaudi processors using the SynapseAI software suite, which supports the TensorFlow and PyTorch frameworks and requires "minimal code changes."

Server maker Supermicro came out of the gate first with systems support for Gaudi2 via the new Supermicro X12 Gaudi2 training server, and the training chip is also getting storage support via DDN's AI400X2 storage system.

Gaudi2 is arriving not long after the first-generation training chip made its debut in cloud instances offered by Amazon Web Services last October.

Real-world deployments of Gaudi are limited, of course, but Intel points to its use inside its own Mobileye business unit.

Gaby Hayon, a Mobileye executive, said multiple teams in the division are using Gaudi in the cloud or on-premises, and they "consistently see significant cost savings relative to existing GPU-based instances across model types, enabling them to achieve much better time-to-market for existing models or training much larger and complex models aimed at exploiting the advantages of the Gaudi."

The non-Intel company vouching for Gaudi is Leidos, a Reston, Virginia-based biomedical research company, which said it has seen cost savings of more than 60 percent when training models for X-ray scans on AWS's Gaudi-powered DL1 instance, compared with the p3dn.24xlarge instance powered by Nvidia's five-year-old V100 GPU.

Greco inference chip and Arctic Sound-M GPU teased

Later this year, Intel expects two more chips for different kinds of accelerated computing to reach customers, with Habana's Greco inference chip set to start sampling in the second half of the year and the multi-purpose Arctic Sound-M server GPU launching in the third quarter.

Like Gaudi2, Greco is jumping to a 7nm process from its predecessor's 16nm. While its 16GB memory capacity is the same as the first-generation chip's, Greco is moving to LPDDR5 from Goya's DDR4, which boosts memory bandwidth more than fivefold, from 40GB/s to 204GB/s.

Unlike Gaudi2, Greco is getting smaller, moving to a single-slot, half-height, half-length PCIe form factor from Goya's dual-slot design. It also needs less power, with a TDP of 75 watts, down from the first-generation chip's 200 watts.
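Taken together, the bandwidth and power figures for Goya and Greco imply a large gain in memory bandwidth per watt. The minimal Python sketch below works through that arithmetic using only the numbers quoted for the two chips. "Bandwidth per watt of TDP" is an illustrative ratio assumed for this example, not a metric Intel or Habana has published, and the names in the code are hypothetical.

```python
# Figures as quoted for Goya (first gen) and Greco. The derived
# "bandwidth per watt of TDP" ratio is illustrative, not an Intel metric.
GOYA = {"mem_bw_gbs": 40, "tdp_w": 200}
GRECO = {"mem_bw_gbs": 204, "tdp_w": 75}


def bandwidth_per_watt(chip: dict) -> float:
    """Memory bandwidth (GB/s) per watt of thermal design power."""
    return chip["mem_bw_gbs"] / chip["tdp_w"]


def percent_change(old: float, new: float) -> float:
    """Signed percentage change from old to new."""
    return (new - old) / old * 100


if __name__ == "__main__":
    print(f"bandwidth: {GRECO['mem_bw_gbs'] / GOYA['mem_bw_gbs']:.2f}x")
    print(f"TDP change: {percent_change(GOYA['tdp_w'], GRECO['tdp_w']):.1f}%")
    print(f"GB/s per watt: Goya {bandwidth_per_watt(GOYA):.2f}, "
          f"Greco {bandwidth_per_watt(GRECO):.2f}")
```

Bandwidth rises 5.1x while TDP falls 62.5 percent, so memory bandwidth per watt of TDP goes from 0.20GB/s to 2.72GB/s. TDP is a thermal ceiling rather than measured power draw, so this is only a rough indication.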

Intel's accelerated computing lineup extends beyond Habana, too. At Intel Vision, the company also unveiled its Arctic Sound-M, a server GPU designed to be a "super flexible" product optimized for cloud gaming, media processing, virtual desktop infrastructure and inference. It will come as a PCIe Gen 4 card and be available in more than 15 systems from Cisco, Dell Technologies, Hewlett Packard Enterprise, Supermicro, Inspur, and H3C.

Arctic Sound-M relies on the same Xe HPG microarchitecture as Intel's new Arc discrete GPUs for PCs, so the server GPU sports similar features: up to four Xe Media Engines, up to 32 Xe cores and ray tracing units, AI acceleration via XMX Matrix Engines, and hardware acceleration for AV1 video encoding and decoding, which Intel said is an industry first for a server GPU.

The chipmaker said these specs translate into support for more than 30 1080p streams for video transcoding, more than 40 game streams for cloud gaming, up to 62 virtualized functions for virtual desktop infrastructure, and up to 150 TOPS (trillion operations per second) for media AI analytics.

Intel will offer the Arctic Sound-M server GPU in two power envelopes: 150 watts for maximum peak performance and 75 watts for high-density, multi-purpose use.

Intel didn't provide competitive comparisons for either the Greco or Arctic Sound-M chips.

With Intel also still planning to release its Ponte Vecchio GPU for AI and high-performance computing workloads later this year, you may be wondering whether Intel's GPUs and Habana deep learning chips create too much overlap in the company's portfolio.

In the pre-briefing with journalists, Habana Labs COO Eitan Medina explained that Intel is offering CPUs, GPUs and deep learning chips for AI purposes in the datacenter because "different customers are using a different mix of applications for different servers."

For instance, Intel's Xeon server CPUs are best suited for data pre-processing, inferencing, and a broad range of applications in the same server, according to Medina, while Intel's GPUs are a better fit for servers that have a high mix of AI, HPC and graphics workloads. Habana's chips, on the other hand, are geared towards servers that are mostly used for deep learning workloads.

Intel is hoping this strategy can slow down Nvidia's fast-growing accelerated computing and AI businesses while also helping the x86 giant diversify beyond its traditional CPU business, which is facing increased competition from AMD as well as several companies working on chips using the Arm or RISC-V instruction set architectures. ®